June 25, 2013

Physicists working at the National Institute of Standards and Technology (NIST) and the Joint Quantum Institute (JQI) are edging ever closer to getting really random.

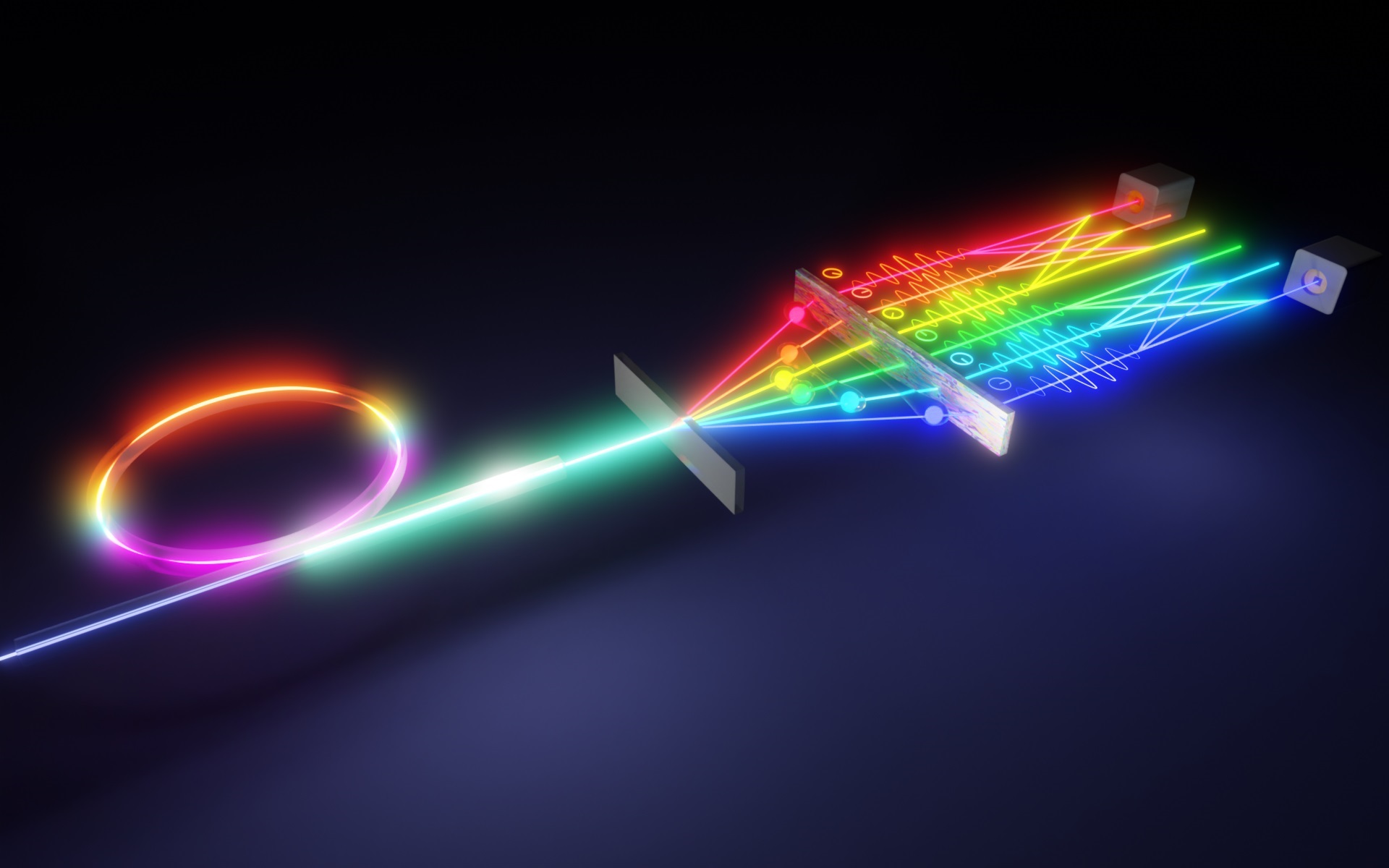

Their work, a source that provides the most efficient delivery of a particularly useful sort of paired photons yet reported,* sounds prosaic enough, but it represents a new high-water mark in a long-term effort toward two very different and important goals: a definitive test of a key feature of quantum theory and improved security for Internet transactions.

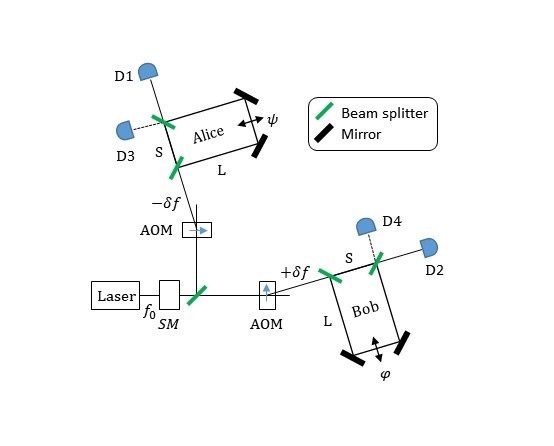

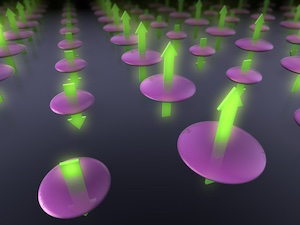

The quantum experiment at the end of the rainbow is an iron-clad test of Bell's inequality. The Irish physicist John Stewart Bell first proposed it in 1964 to resolve conflicting interpretations of one of the stranger parts of quantum theory. Theory seems to say that two "entangled" objects such as photons must respond to certain measurements, such as their direction of polarization, in a way that implies that each knows instantaneously what happens to the other, even if they're so far apart that the information would have to travel faster than the speed of light.

One possible explanation is the so-called "hidden variable" idea. Maybe this behavior is somehow wired into the photons at the moment they're created; the results of our experiments are coordinated behind the scenes by some unknown extra process. Bell, in a clever bit of mathematical logic, pointed out that were this so, evidence of the unknown variable would show up statistically in our measurements of such entangled pairs, as a limit the results could never exceed: Bell's inequality.

"Simply put," explains NIST physicist Alan Migdall, "we determine the values for each outcome according to this relation and add them up. It says we can't get—in this version—anything greater than two. Except, we do."

The experiment has been run over and over again for years, and the violation of Bell's inequality should put hidden variables to rest, but for the fact that no real-world experiment is perfect. That's the source of the Bell experiment "loopholes."

"The theorists are very clever and they say, okay, you got a number greater than two but your detectors weren't a hundred percent efficient, so that means you're not measuring all the photons," says Migdall, "Maybe there's some system that has some way of controlling which ones you're going to get, and the subset you detect gives that result. But if you had measured all of the photons you would not have gotten a number greater than two."

That's the experimentalists' challenge: to build systems so tight, so loss-free, that the loopholes are mathematically impossible. Theorists calculate that closing the loophole requires an experimental system with at least 83 percent efficiency—no more than 17 percent photon loss.
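
One standard way to arrive at a figure near 83 percent is sketched below. It assumes the Clauser-Horne form of the inequality, a maximally entangled pair, the same overall efficiency on both sides, and no background counts; these are textbook assumptions, not details from the NIST paper. Coincidence terms then scale with the square of the efficiency and single-detection terms scale linearly.

```python
import math

# Ideal (lossless) value of the signed sum of the four joint-detection
# probabilities at the optimal angles for a maximally entangled pair.
# The two single-detection probabilities are 1/2 each, summing to 1.
JOINT_SUM_IDEAL = (1 + math.sqrt(2)) / 2

def ch_value(eta):
    """Clauser-Horne quantity at overall efficiency eta (assumed model).
    Hidden-variable models require this to be <= 0."""
    return eta ** 2 * JOINT_SUM_IDEAL - eta

def threshold_efficiency():
    """Efficiency above which ch_value becomes positive."""
    return 1 / JOINT_SUM_IDEAL

print(round(threshold_efficiency(), 4))  # 0.8284
print(ch_value(0.84) > 0)                # True: a violation is possible
print(ch_value(0.80) > 0)                # False: loss hides the violation
```

In this simplified model an 84 percent efficient chain sits just above the threshold, which is why every fraction of a percent of optical loss matters.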

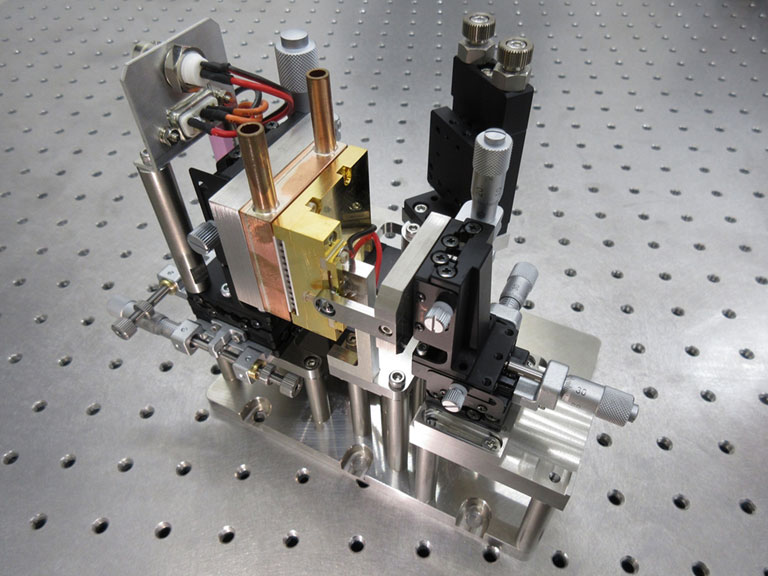

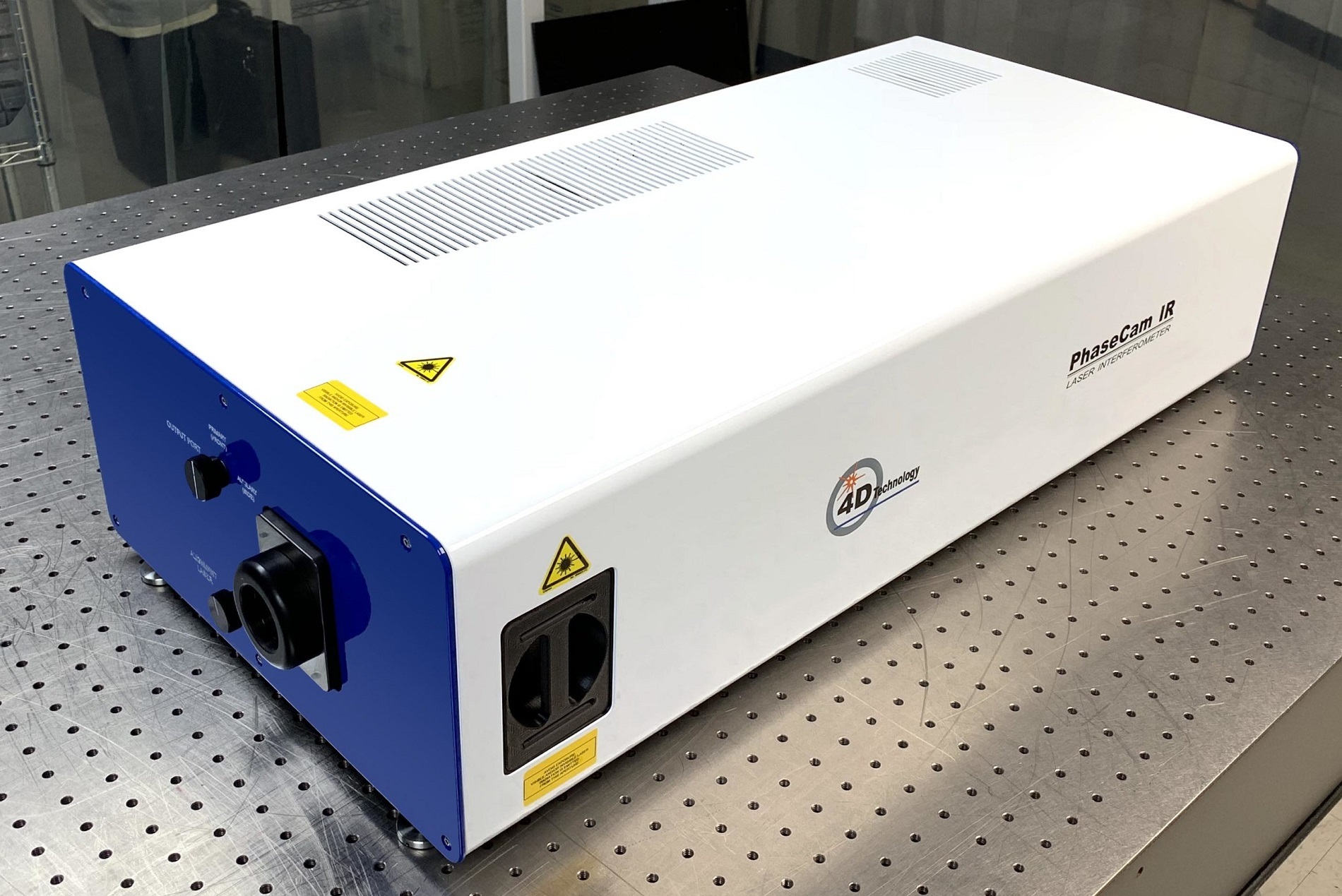

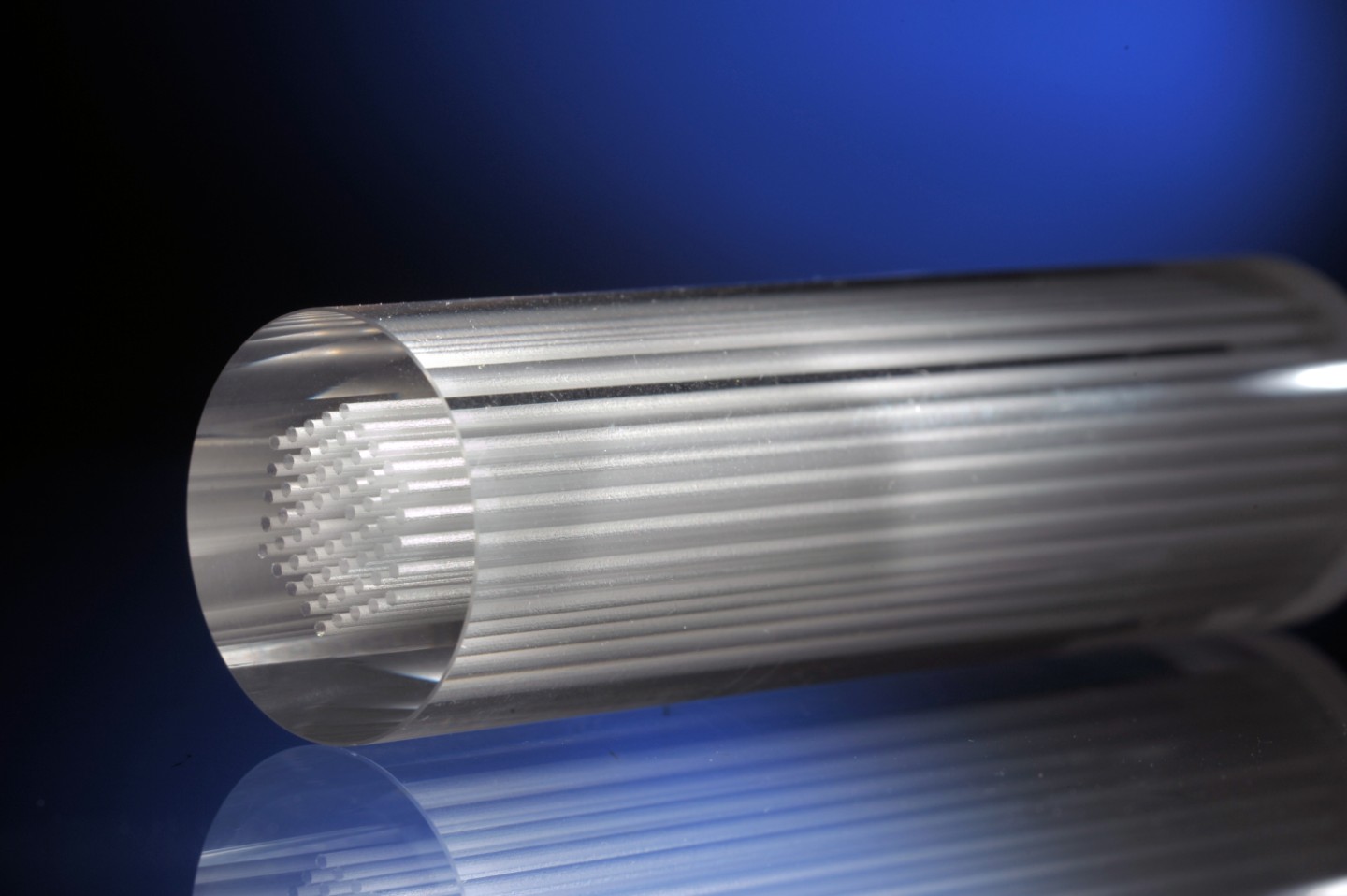

"It's really hard to do this," says Migdall, "because it's not just the detector, it's everything from where the photons are created to where they're detected. Any loss in the optics is part of that." Fortunately, the team has a 99 percent efficient detector that was developed by NIST physicist Sae Woo Nam in 2010.**

Their latest work addresses that optical part of the chain. The team has (carefully) built a source of paired, single-mode photons with a symmetric efficiency of 84 percent. Single-mode means the photons are restricted to the simplest spatial beam shape required for a variety of quantum information applications. Symmetric means your chances of detecting either photon of the pair are equally good. The photon pairs here are not the entangled pairs of a Bell test, but rather a "heralding" system where the detection of one photon tells you that the other exists, and so can be measured. You'd need two of these systems, merged together without efficiency loss, to run a loophole-free Bell test. But it's a big step on the way.

It's also a big step for an entirely different problem: a trusted source of truly random numbers, says Migdall. Random numbers are essential to encryption and identity verification systems on the Internet, but true randomness is hard to come by. Quantum processes of the same sort tested by Bell's inequality would be one source of absolutely guaranteed, iron-clad randomness, provided there is no hidden variable that makes them not random. Equally important, the absence of a hidden variable means that the random number did not exist before you measured it, which makes it pretty hard for a spy or a cheater to steal.

"Nobody in the physics world believes we'll get a surprising result, but in the security world you have to believe that everything out there is malicious, that Mother Nature is malicious," says Migdall, "We have to prove quantum theory to those guys."

For more on that and the NIST random number "beacon," see "Truly Random Numbers — But Not by Chance" at www.nist.gov/pml/div684/random_numbers_bell_test.cfm. The Joint Quantum Institute is a collaboration of NIST, the University of Maryland and the Laboratory for Physical Sciences at UMD.

*M. Da Cunha Pereira, F.E. Becerra, B.L. Glebov, J. Fan, S.W. Nam and A. Migdall. Demonstrating highly symmetric single-mode, single-photon heralding efficiency in spontaneous parametric downconversion. Optics Letters, Vol. 38, Issue 10, pp. 1609-1611 (2013). DOI: 10.1364/OL.38.001609

**See the 2010 NIST news story, "NIST Detector Counts Photons With 99 Percent Efficiency" at www.nist.gov/pml/div686/detector_041310.cfm.